Choosing the best method for your organization

Everyone knows businesses run on data. However, you also know that data lives in many sources and has little value on its own. It only becomes a competitive advantage once it’s pooled together, standardized, and securely and consistently available. Only then can you use it to enhance analytics and reporting, machine learning and AI, operational efficiencies, customer relations, and more.

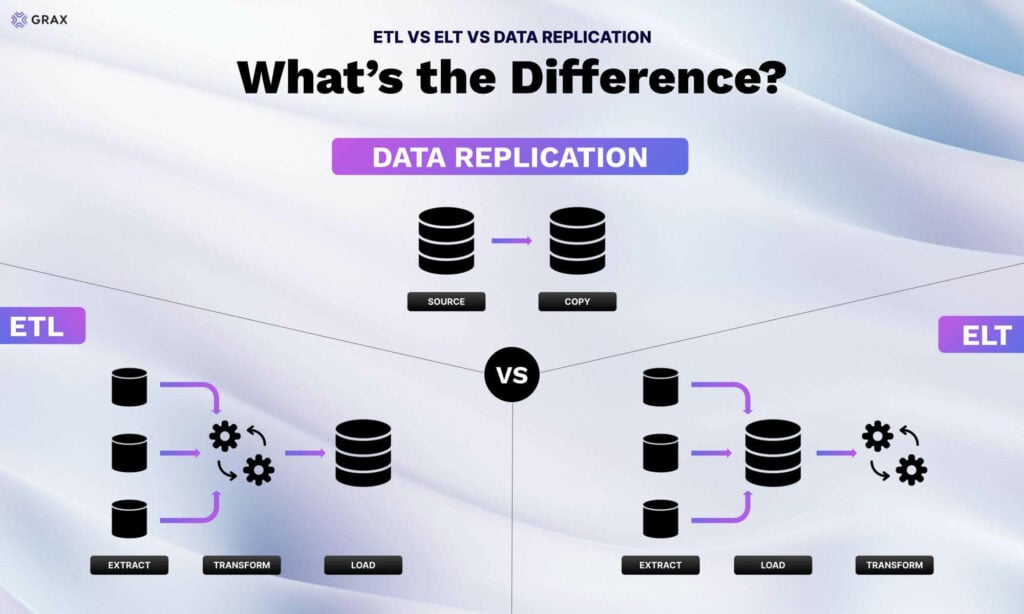

There are three main processes for preparing data for downstream use:

- ETL (Extract, Transform, Load)

- ELT (Extract, Load, Transform)

- Data Replication

We’ll define each method, discuss the pros and cons, and help you decide which best suits your needs.

Definitions and Basic Concepts

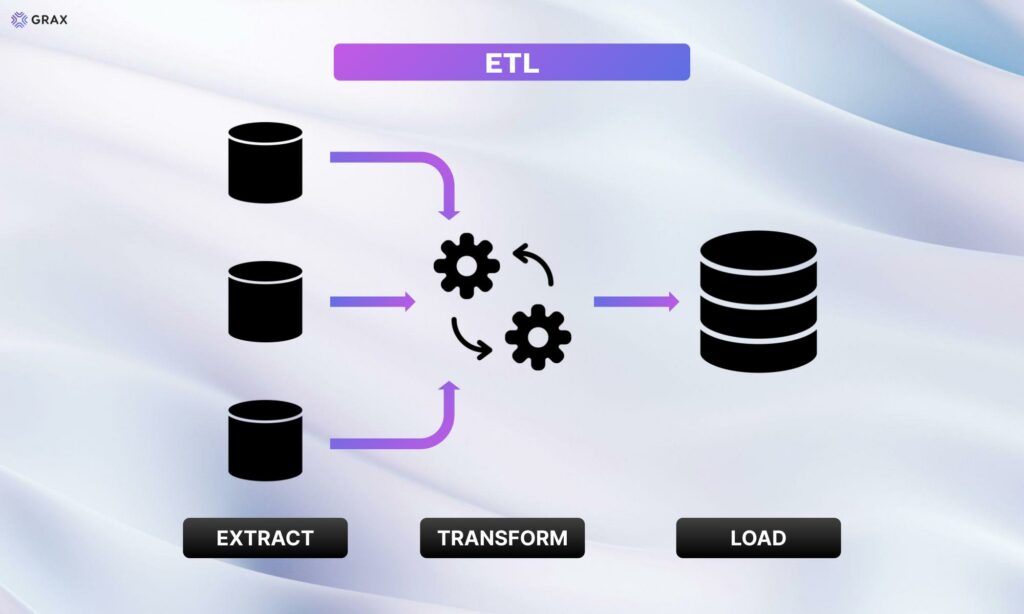

What is ETL?

An ETL pipeline is a set of processes used to extract data from various sources, transform it into a format suitable for analysis and reporting, and load it into a target database or data warehouse.

Process Flow:

- Extraction

Data is gathered from multiple sources, such as databases, cloud storage, APIs, or flat files. The extracted data may come in various formats and structures, such as JSON, CSV, XML, or SQL tables.

- Transformation

Data is cleaned, enriched, and formatted to meet the target system’s requirements. Transformation can include a variety of operations, such as filtering out irrelevant data, correcting errors, standardizing formats, aggregating data, and enriching data with additional information.

- Loading

Transformed data is loaded into the target system in a structured format. This process may involve batch loading or incremental loading, depending on the volume of data and the update frequency.
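The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the CSV content, the `orders` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for the target warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data: a CSV export with inconsistent formatting.
RAW_CSV = """id,name,amount
1, Alice ,10.50
2,BOB,
3,carol,7.25
"""

def extract(text):
    """Extract: read rows from the source (here, an in-memory CSV)."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop incomplete rows, standardize names, cast types."""
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue  # filter out rows with a missing amount
        cleaned.append(
            (int(row["id"]), row["name"].strip().title(), float(row["amount"]))
        )
    return cleaned

def load(conn, rows):
    """Load: write the already-clean rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

def run_etl(conn, text=RAW_CSV):
    """Run the steps in ETL order: the target only ever sees clean data."""
    load(conn, transform(extract(text)))
    return conn.execute("SELECT id, name, amount FROM orders ORDER BY id").fetchall()
```

Calling `run_etl(sqlite3.connect(":memory:"))` returns only the two complete rows. The incomplete record is filtered out before anything reaches the target.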

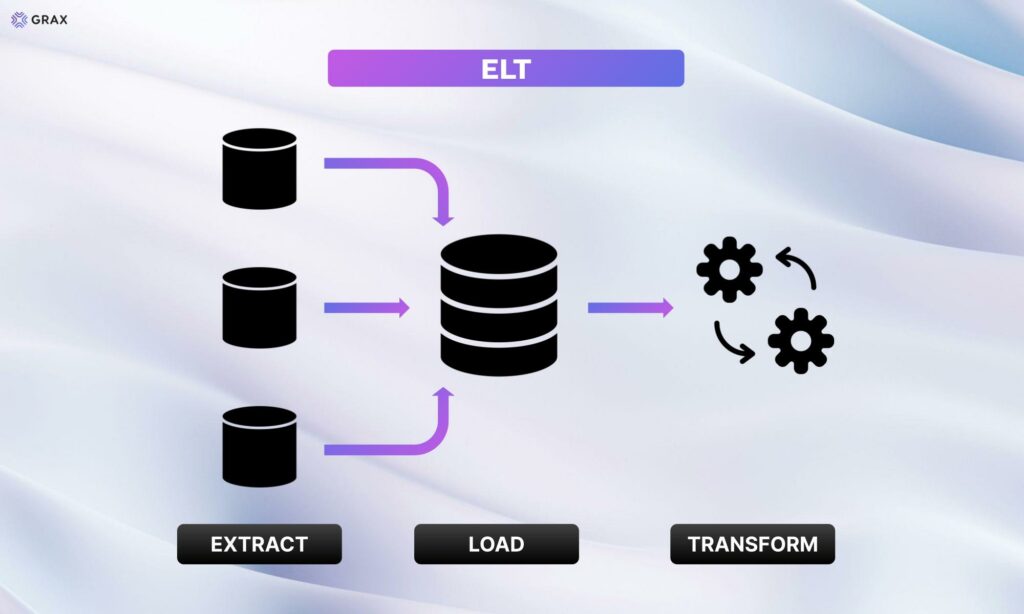

What is ELT?

ELT, or Extract, Load, Transform, is a variation of the traditional ETL method. It swaps the last two steps: raw data is loaded into the target system first and transformed there. This approach leverages the processing power and scalability of modern data warehouses to perform complex transformations more efficiently.

Process Flow:

- Extraction

This process is the same as ETL’s extraction.

- Loading

This involves transferring the raw, unprocessed data into the target data warehouse or data lake. Data is loaded into the target system as-is.

- Transformation

Raw data is transformed within the data warehouse or lake into a usable format for analysis and reporting.
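For contrast with ETL, here is a minimal sketch of the same idea in ELT order. The raw rows, the `raw_orders` and `orders` tables, and the function names are hypothetical, and an in-memory SQLite database stands in for a cloud data warehouse. The point to notice is that the cleanup runs as SQL inside the target system, after the raw data has already landed.

```python
import sqlite3

# Hypothetical raw records, loaded exactly as they arrive from the source.
RAW_ROWS = [("1", " Alice ", "10.50"), ("2", "BOB", ""), ("3", "carol", "7.25")]

def load_raw(conn, rows):
    """Load: land the data untouched, every column still plain text."""
    conn.execute("CREATE TABLE raw_orders (id TEXT, name TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", rows)

def transform_in_warehouse(conn):
    """Transform: let the target system's SQL engine do the cleanup."""
    conn.execute(
        """
        CREATE TABLE orders AS
        SELECT CAST(id AS INTEGER)  AS id,
               UPPER(TRIM(name))    AS name,
               CAST(amount AS REAL) AS amount
        FROM raw_orders
        WHERE TRIM(amount) <> ''
        """
    )

def run_elt(conn):
    """Run the steps in ELT order and report raw versus cleaned results."""
    load_raw(conn, RAW_ROWS)
    transform_in_warehouse(conn)
    raw_count = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
    clean = conn.execute("SELECT id, name, amount FROM orders ORDER BY id").fetchall()
    return raw_count, clean
```

All three raw rows remain available in `raw_orders`, including the incomplete one, while `orders` holds only the cleaned result. Keeping the raw copy means a transformation can be rewritten and rerun later without extracting the data again.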

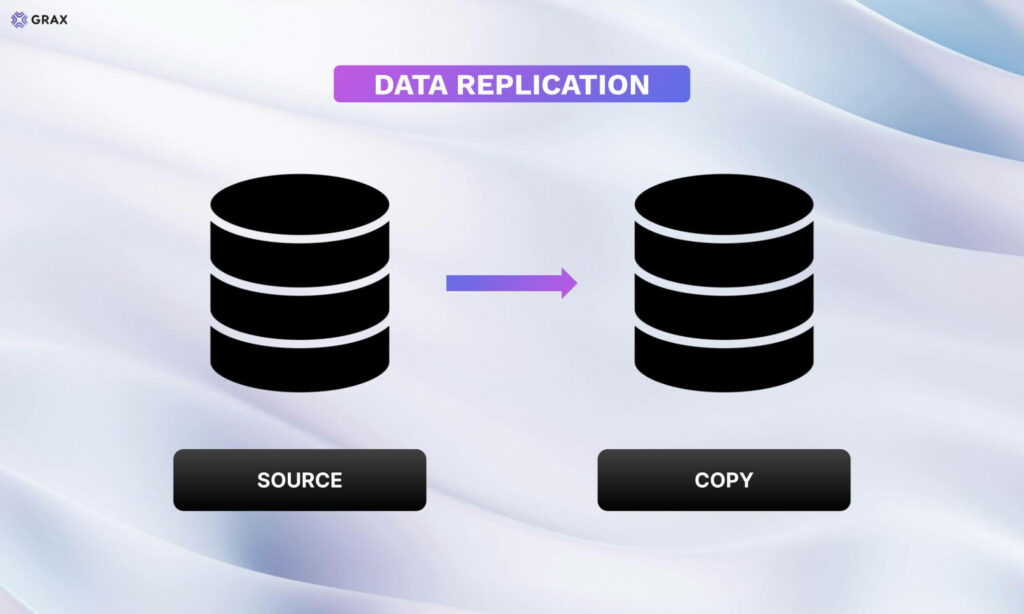

What is Data Replication?

Data replication copies data from one system to another in a format that mirrors the original. It provides an exact copy of all historical data and schema, up to the current time. This ensures data is consistently available across different systems.

Process Flow:

- Source Data

Reads data from the source system, such as Salesforce.

- Replication

Copies and synchronizes data with the target system.
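As a rough illustration of what "mirroring" means, the sketch below copies one table, schema included, from a source database to a target without changing anything. It is an assumption-laden toy: it uses two SQLite connections, does a full refresh on every call rather than continuous synchronization, and the `replicate_table` helper and `account` table are hypothetical. It does not represent how any particular replication product works.

```python
import sqlite3

def replicate_table(source, target, table):
    """Mirror one table (schema and rows) from source to target, unchanged."""
    # Copy the schema by reusing the source's own CREATE TABLE statement.
    (ddl,) = source.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = ?",
        (table,),
    ).fetchone()
    target.execute(f'DROP TABLE IF EXISTS "{table}"')
    target.execute(ddl)

    # Copy every row as-is: no filtering, cleaning, or reshaping.
    rows = source.execute(f'SELECT * FROM "{table}"').fetchall()
    if rows:
        placeholders = ", ".join("?" for _ in rows[0])
        target.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', rows)
    target.commit()
    return len(rows)

# Usage: the target picks up a schema change with no pipeline edits.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
source.execute("CREATE TABLE account (id TEXT PRIMARY KEY, name TEXT)")
source.execute("INSERT INTO account VALUES ('001', 'Acme')")
source.commit()
replicate_table(source, target, "account")

source.execute("ALTER TABLE account ADD COLUMN industry TEXT")
source.commit()
replicate_table(source, target, "account")  # target now has the new column too
```

Because the schema is read from the source each time, nothing in the copy logic names a specific field. That is the property that separates replication from a pipeline with hand-written field mappings.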

Comparing ETLs, ELTs, and Data Replication

ETLs Pros and Cons

Pros:

- Control over Data Transformation

ETL allows for detailed data transformation before loading. This helps ensure data is cleaned, formatted, and aggregated in a way that meets the specific requirements of the target system and the business use case.

- Data Quality

Since the transformation process includes data cleaning and validation, it helps in maintaining high data quality.

- Established Technology with Robust Tools

ETL is a mature technology with a wide range of tools available, such as Talend, Informatica PowerCenter, and Apache NiFi. These tools offer extensive support and community resources.

Cons:

- Time-Consuming Transformation Process

The transformation step in ETL can be time-consuming, especially when dealing with large datasets. This can lead to delays in making the data available for analysis.

- Resource-Intensive

ETL processes can require significant computational power and storage to perform transformations. This can increase the cost and complexity of managing the ETL infrastructure.

- Less Flexible for Large-Scale Data Processing

ETL can struggle with scalability issues when handling very large volumes of data or real-time data streams.

ELTs Pros and Cons

Pros:

- Faster Processing

By loading data first and transforming it later, ELT can speed up the initial data ingestion process. This is beneficial when dealing with large volumes of data that need to be loaded quickly.

- Scalability with Modern Data Architectures

ELT leverages the computational power and scalability of modern data warehouses, such as Snowflake and Google BigQuery. These platforms can handle large-scale data processing and complex transformations efficiently.

- Suitable for Large Data Volumes

ELT is designed to handle big data environments, where the ability to process and transform vast amounts of data is crucial.

Cons:

- Requires a Powerful Data Warehouse

ELT relies on the target data warehouse to perform transformations. This means organizations need to invest in a robust and powerful data warehouse infrastructure, which can be costly.

- Potential Data Quality Issues

Since data is loaded before it is transformed, there is a risk of loading unclean or inconsistent data into the data warehouse. This can lead to data quality issues that need to be addressed during transformation.

- Dependence on the Target System’s Transformation Capabilities

If the data warehouse lacks advanced transformation features, it can limit your ability to perform complex data transformations.

Data Replication vs ETLs and ELTs

While ETL and ELT are both powerful data integration methodologies, data replication offers distinct advantages in four key scenarios:

1. Simplified Management and Lower Cost

Data replication simplifies data management by automating the process of keeping data copies synchronized. This reduces the administrative overhead and complexity associated with managing data across different systems. As a result, it’s easier to ensure data consistency and integrity. You also minimize labor and system costs.

Comparison with ETLs and ELTs

- ETL: The ETL process involves complex transformations and scheduling of data movement. This requires significant administrative effort to manage and monitor. Ensuring data consistency and integrity across different systems can be challenging.

- ELT: While ELT reduces some complexity by leveraging the target system for transformations, it still requires careful management of the loading and transformation processes. Ensuring data consistency across large volumes of data can be complex and time-consuming.

2. Real-Time Data Availability

Data replication ensures data is consistently and continuously synchronized across multiple systems in real time. This means that any updates made to the source data are immediately reflected in the replicated copies, providing up-to-date information at all times.

Comparison with ETLs and ELTs

- ETL: The ETL process involves scheduled batch processing. This means data is only updated during predefined intervals. This can result in latency, with data potentially being hours or even days old, depending on the frequency of the ETL jobs.

- ELT: While ELT can handle larger data volumes more efficiently and potentially reduce some latency compared to ETL, it still typically operates on scheduled batch processes for loading and transformation. It does not guarantee real-time updates.
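The latency difference comes down to how often changes are picked up. The sketch below shows one common way to pick up only what changed since the last run, using a "last modified" watermark. The `sync_changes` function, the `modified` field, and the sample records are hypothetical, and this is not a description of how GRAX or any other product synchronizes data. The toy also ignores deletes.

```python
def sync_changes(source_rows, target, watermark):
    """Copy rows modified after `watermark` into `target`; return the new watermark."""
    newest = watermark
    for row in source_rows:
        if row["modified"] > watermark:
            target[row["id"]] = dict(row)  # insert or overwrite the copy
            newest = max(newest, row["modified"])
    return newest

# Usage: the target is only as fresh as the most recent sync.
source = [
    {"id": "001", "name": "Acme", "modified": 1},
    {"id": "002", "name": "Globex", "modified": 2},
]
target = {}
watermark = sync_changes(source, target, watermark=0)

# A record changes at the source after the first sync...
source[0] = {"id": "001", "name": "Acme Corp", "modified": 3}
stale_name = target["001"]["name"]  # the copy still holds the old value

# ...and the copy catches up only when the next sync runs.
watermark = sync_changes(source, target, watermark)
```

Run once a night, a loop like this leaves the copy up to a day old. Run continuously, or triggered by change events, the same logic keeps the gap small.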

3. Data Reliability and Integrity

Data replication provides high availability by maintaining multiple copies of the data across different systems or locations. In the event of a system failure or disaster at one site, the replicated data ensures that operations can continue seamlessly from another site, minimizing downtime and data loss.

In addition, data replication solutions such as GRAX provide an exact copy of all historical data and schema. This is critical when dealing with data from applications like Salesforce. GRAX automatically and directly replicates any and all changes in the Salesforce schema. This includes everything from the addition or removal of fields, to new objects, changes in relationships, and more.

Replication of this kind keeps data complete, accurate, and consistent without manual intervention. It also avoids the errors that can occur during ETL and ELT data manipulation.

Comparison with ETLs and ELTs

Because ETL and ELT typically move data in batches, they don’t inherently provide high availability or redundancy. If a source system fails, the data in the target system may not be current. The recovery process can be complex and time-consuming.

Schema changes are also problematic. When users modify a schema in a source application such as Salesforce, for instance by adding, renaming, or removing fields or objects, you need to update the ETL or ELT processes that extract the data. If you don’t, the extraction phase may not pull the correct data, which can result in errors due to missing or unexpected schema elements.

Changing schema can also disrupt transformation rules. To ensure integrity, you need to adjust transformation logic to handle changes in things like validation rules or field lengths.
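To make the schema problem concrete, here is a minimal sketch of a drift check a pipeline owner might run before extraction. The function name and the field names are hypothetical. It compares the fields a pipeline was written against with the fields the source currently exposes.

```python
def detect_schema_drift(expected_fields, actual_fields):
    """Report fields the pipeline does not know about, and fields it expects but cannot find."""
    expected, actual = set(expected_fields), set(actual_fields)
    return {
        "added": sorted(actual - expected),    # new at the source, silently ignored
        "removed": sorted(expected - actual),  # gone from the source, extraction breaks
    }

# Usage: an admin renamed "Phone" to "Mobile__c" in the source application.
pipeline_fields = ["Id", "Name", "Phone"]
source_fields = ["Id", "Name", "Mobile__c"]
drift = detect_schema_drift(pipeline_fields, source_fields)
```

A rename shows up as one removed field plus one added field, so a check like this can flag the change but cannot tell you the two are the same data. Someone still has to update the extraction and transformation logic by hand.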

4. Data Integration

GRAX automatically writes the real-time and historical data it stores in the customer’s own cloud infrastructure in Parquet format. This makes data integration easier and more efficient. Because Parquet stores data in columns, it provides better compression and faster read times for analytical queries. Parquet also supports complex nested data structures.

Parquet is widely supported by major data storage and data processing platforms. This means GRAX customers can easily pipe data into a central data lake or data warehouse, such as Snowflake. The data can also feed analytics platforms such as Tableau, Microsoft Azure Synapse Analytics, Amazon QuickSight, or Power BI.
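The benefit of a columnar layout can be shown without any Parquet tooling. The sketch below is a conceptual toy in plain Python, not the Parquet format itself, and the sample records and helper names are made up. It pivots row-oriented records into columns, then shows the two effects described above: an aggregate reads a single column, and repeated values sitting next to each other compress well. Run-length encoding is used here as a simple stand-in for the encodings a real columnar format applies.

```python
# Hypothetical row-oriented records, as an application would store them.
ROWS = [
    {"id": 1, "region": "EMEA", "amount": 10.5},
    {"id": 2, "region": "EMEA", "amount": 4.5},
    {"id": 3, "region": "APAC", "amount": 5.0},
]

def to_columns(rows):
    """Pivot a list of records into one list of values per field."""
    columns = {}
    for row in rows:
        for key, value in row.items():
            columns.setdefault(key, []).append(value)
    return columns

def run_length_encode(values):
    """Collapse runs of repeated neighbors into [value, count] pairs."""
    encoded = []
    for value in values:
        if encoded and encoded[-1][0] == value:
            encoded[-1][1] += 1
        else:
            encoded.append([value, 1])
    return encoded

columns = to_columns(ROWS)
total = sum(columns["amount"])                   # touches one column only
regions = run_length_encode(columns["region"])   # repeated values collapse
```

In a row layout, the same total would require reading every field of every record.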

Comparison with ETLs and ELTs

Because of the complexities inherent in ETL and ELT, data quality and processing issues are a concern. Incomplete, delayed, or inconsistent data can lead to inaccurate and unreliable integrations.

Choosing the Right Approach

Specific business needs dictate the best data management method. ETL is suitable for scenarios requiring detailed transformations, while ELT could be beneficial for organizations that have robust data warehouse capabilities and big data requirements. GRAX’s data replication approach is ideal for real-time needs, historical analyses, and when data availability, integrity, and cost-effectiveness are paramount. Make sure you understand your business’s priorities before choosing your approach.

Ready to maximize outcomes?

Talk with our product experts to discover how.