About this talk

Watch this GRAX demo video to see how easy it is to take control and ownership of your Salesforce data with GRAX:

- Back up all your Salesforce orgs

- Keep archived Salesforce data 100% accessible in production

- Capture up to every single change

- Pipe and reuse your Salesforce data anytime, anywhere

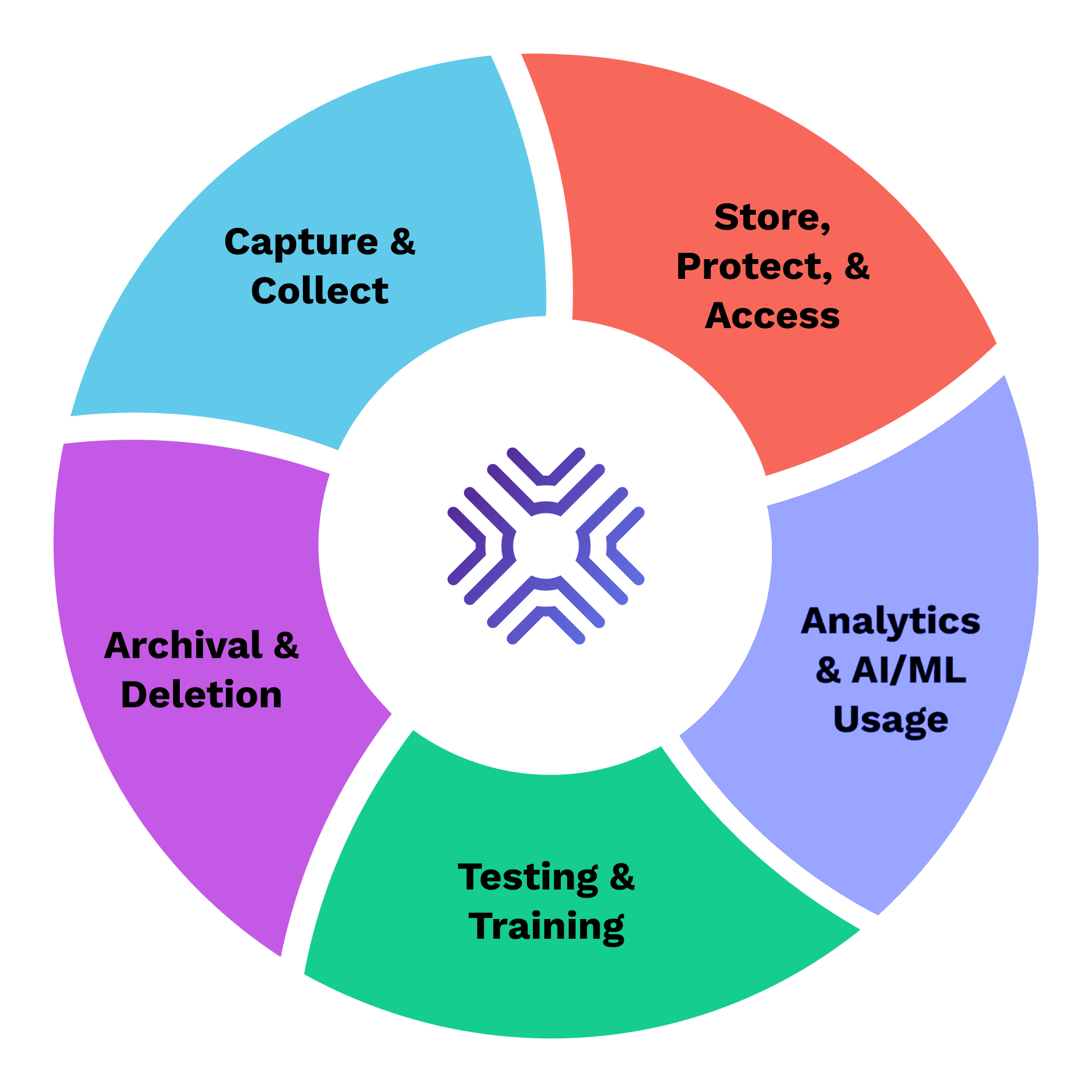

GRAX is the new way that businesses preserve, recover, and act on their historical data. GRAX captures an irrefutable, recoverable record of your Salesforce data, storing it in your own environment and making it available for analytics, data warehousing, AI/ML, and more. This approach creates a modern, unified data fabric that helps companies protect, understand, and adapt to changes in their business faster.

Complete the form to watch the GRAX demo video of how our solution can effortlessly preserve, recover, manage, and analyze your historical Salesforce data - with no code required.

Transcript

ALEX DAMEROW: Hello and welcome. This is Alex Damerow with the GRAX sales team. In today's video, I'm going to be giving you a quick tour of the GRAX Data Lifecycle Management Platform for Salesforce. Let's get started.

At GRAX, we've taken the complexity, maintenance, and ongoing risk of human error associated with typical backup vendors and have fully automated this process for your business. Simply turn on auto backup. And with the click of a button, your entire Salesforce environment can be protected.

Out of the box, our auto backup process runs hourly and captures all incremental changes to your environment, including standard objects, custom objects, managed package objects, metadata, files, attachments, and more. Better yet, GRAX Auto Backup intelligently reads your Salesforce schema every time it runs, meaning that as new managed packages are installed, new custom objects are created, or any other changes occur, there is still no maintenance required. And you can rest assured that your environment is continually protected.

As you will see throughout the remainder of this video, one key feature of GRAX is its ability to make your data history accessible at all times. Here on the Auto Backup tab, your entire object list can be easily sorted and filtered to navigate to a record of your choice. Let's drill into the account object now.

As you can see, GRAX Auto Backup not only backs up your records, but intuitively maps out a record's relational hierarchy, including all related children, files, and field values for easy review and interactivity. GRAX also tracks the lineage of your records so you can easily traverse a record's version history and also perform actions on a record directly within the GRAX UI.

With GRAX Time Machine, you now have the ability to track a record's entire version history live within your Salesforce production environment. By simply adding the GRAX Time Machine Lightning Web Component to any record, you can easily examine and compare previous versions of a record, live in production.

From this Lightning Web Component, you also have the ability to restore an entire record, or even a granular field value, back to a previous point in time with just a simple click. Best of all, since GRAX captures all field values, this means you can now better meet your business compliance requirements and operate with an unlimited field audit trail of your Salesforce records for an unlimited retention period.
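Editor's illustration: the compare-and-restore idea described above can be sketched in a few lines of Python. This is a minimal sketch and not GRAX code or a GRAX API. It assumes each record version is a plain dictionary of field names to values, and the function names `diff_versions` and `restore_field` are hypothetical.

```python
from typing import Any, Dict, Tuple


def diff_versions(old: Dict[str, Any], new: Dict[str, Any]) -> Dict[str, Tuple[Any, Any]]:
    """Return {field: (old_value, new_value)} for every field whose value differs.

    Hypothetical helper: a field missing from one version is reported as None.
    """
    fields = set(old) | set(new)
    return {
        f: (old.get(f), new.get(f))
        for f in sorted(fields)
        if old.get(f) != new.get(f)
    }


def restore_field(current: Dict[str, Any], previous: Dict[str, Any], field: str) -> Dict[str, Any]:
    """Return a copy of `current` with one field reverted to its value in `previous`."""
    restored = dict(current)  # copy so the caller's record is not mutated
    restored[field] = previous.get(field)
    return restored


# Example data (made up for illustration).
previous_version = {"Name": "Acme", "Phone": "555-0100"}
current_version = {"Name": "Acme Corp", "Phone": "555-0100"}

changes = diff_versions(previous_version, current_version)
reverted = restore_field(current_version, previous_version, "Name")
```

Here `changes` contains only the `Name` field, and `reverted` matches the previous version because that was the only field that differed.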

When your organization is faced with costly data storage overages, declining Salesforce application performance, or the need to meet legal requirements for long-term data retention, GRAX provides the ability to purge data from Salesforce and efficiently store it off platform. Best of all, with GRAX, you're able to retain full access to this data, both within the GRAX UI and even live within your Salesforce production environment.

To execute an archive, simply press the New Archive button and select the data set you wish to remove from Salesforce. This data can be selected from a prebuilt template, SOQL query, Salesforce report, CSV, or list of record IDs. From there, choose your desired verification options. With just a few clicks, your data can be archived off of the Salesforce platform.
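Editor's illustration: when the data set is selected with a SOQL query, the query might look like the one built below. This is only a sketch under assumptions. The choice of closed Cases and the helper name `closed_cases_before` are hypothetical examples, and nothing here reflects how GRAX itself accepts or runs the query. `Case`, `IsClosed`, and `ClosedDate` are standard Salesforce names, and SOQL datetime literals are written unquoted in UTC.

```python
from datetime import datetime, timezone


def closed_cases_before(cutoff: datetime) -> str:
    """Build a SOQL query selecting the Ids of Cases closed before `cutoff`.

    Hypothetical helper for illustration; `cutoff` must be timezone-aware.
    """
    if cutoff.tzinfo is None:
        raise ValueError("cutoff must be timezone-aware")
    # SOQL datetime literals are unquoted, e.g. 2020-01-01T00:00:00Z
    stamp = cutoff.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return f"SELECT Id FROM Case WHERE IsClosed = true AND ClosedDate < {stamp}"


query = closed_cases_before(datetime(2020, 1, 1, tzinfo=timezone.utc))
print(query)
```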

Next, let's look at what your archived data could look like live within your Salesforce production environment. By simply adding our customizable Lightning Web Component to any object, we can easily see the historical data related to a parent record. From here, business users can easily access a popup modal of the record and perform actions to the record based on their user permissions.

Whether it be from a malicious actor or simple human error, erroneous Salesforce deletions can severely impact your daily business operations. GRAX Delete Tracking resolves this concern by keeping a readily accessible log of all records which have been deleted from your Salesforce environment. Using GRAX Delete Tracking, you can easily identify records which have been deleted, navigate to a record, review the record, including its lineage, hierarchy, and field values, and easily restore it back to production with just a few clicks.

When a need arises for mass data restoration, simply navigate to the GRAX Restore tab and select New Restore. As you can see, GRAX provides two methods of mass recovering your Salesforce data. A Point-in-Time Restore allows users to update live Salesforce records to a previous point in time. Or you may also insert records which have been previously archived or deleted from your Salesforce environment. Let's take a look at Point-in-Time Restore now.

First, select your data source, supplying the record IDs which you need to recover. This can be in the form of a Salesforce report, CSV file, or list of record IDs. Once your data source has been selected, you're able to review the records retrieved.

Next, you're able to select specific fields and the point in time which you wish to revert the records back to. After designating your restore criteria, you are a simple click away from successfully recovering your data to a previous point in time.
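Editor's illustration: conceptually, a point-in-time restore picks the latest captured version of a record at or before the chosen time and reverts only the selected fields. The sketch below shows that logic in Python. It is not GRAX's implementation. The history format (a list of timestamp and field-dictionary pairs) and the names `version_as_of` and `fields_to_revert` are assumptions made for the example.

```python
from datetime import datetime
from typing import Any, Dict, Iterable, List, Optional, Tuple

# Assumed shape: (capture time, field values at that time).
Version = Tuple[datetime, Dict[str, Any]]


def version_as_of(history: List[Version], point_in_time: datetime) -> Optional[Dict[str, Any]]:
    """Return the field values of the latest version captured at or before `point_in_time`."""
    candidates = [v for v in history if v[0] <= point_in_time]
    if not candidates:
        return None  # the record had no captured version yet
    return max(candidates, key=lambda v: v[0])[1]


def fields_to_revert(history: List[Version], point_in_time: datetime,
                     fields: Iterable[str]) -> Dict[str, Any]:
    """Return only the selected fields, with their values as of `point_in_time`."""
    snapshot = version_as_of(history, point_in_time)
    if snapshot is None:
        return {}
    return {f: snapshot[f] for f in fields if f in snapshot}


# Example history (made up for illustration).
history = [
    (datetime(2023, 1, 1), {"Name": "Acme", "Rating": "Cold"}),
    (datetime(2023, 6, 1), {"Name": "Acme Corp", "Rating": "Warm"}),
    (datetime(2024, 1, 1), {"Name": "Acme Corp", "Rating": "Hot"}),
]

update = fields_to_revert(history, datetime(2023, 7, 15), ["Rating"])
```

In this example `update` holds just the `Rating` value from the June 2023 version, which is the payload that would be written back to the live record.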

Next, let's look at mass restoring data which has been previously archived or deleted from your Salesforce environment. Simply select the source of the records to be recovered, either by CSV file, which is easily available from the GRAX Search tab, or by supplying a list of record IDs.

From here, GRAX will process the object's hierarchy and provide an opportunity to preview the data set which is to be restored. Next, you are able to input possible field overrides or designate objects in the hierarchy which you would like to be skipped. Lastly, you are able to conduct a final review of your data set before executing your GRAX Restore with just a simple click.

One of the most strategic benefits of GRAX is that it can be fully deployed within your organization's cloud service environment. By choosing to take ownership of your data, you set the foundation for widespread data re-use. GRAX History Stream turns your backup data into an instant asset for your organization by automatically transforming your data for native ingestion across any modern data tool.

You can configure your history stream output by simply selecting which objects you wish to transform. And GRAX will automatically and continually deliver this data to your cloud storage bucket. From there, this data can be easily accessed by your organization's data teams for use in reporting, visualization, artificial intelligence, machine learning, and more.

That's all for today's tour through the GRAX Data Lifecycle Management Platform for Salesforce. If you have any questions, would like to receive a quote, or register for a free trial experience for your business, please reach us at sales@grax.com to learn more.