Relax At The Lakehouse

Ever wondered how to unlock the full potential of your Salesforce data for advanced analytics and AI? This blog post will guide you through building a robust Azure Data Lakehouse with GRAX, leveraging the power of Microsoft Fabric and OneLake. Discover how this architecture streamlines Salesforce data replication, accelerates insights, and utilizes the Medallion architecture for optimal data quality.

What is a Data Lakehouse?

A data lakehouse combines the storage flexibility and scalability of a data lake with the data management and analytics capabilities of a data warehouse. It aims to provide a unified platform for all types of data and analytics workloads.

Data lakes store raw, unstructured, and semi-structured data and use various processing engines, such as Spark. They are highly scalable and cost-effective, are used primarily for data science and machine learning, and offer limited data governance.

Data warehouses store structured data and process it with SQL queries. They scale less flexibly, cost more, and are used mainly for business intelligence and reporting, but they offer strong data governance.

Data lakehouses combine the features of both, allowing for the storage of raw, structured, and semi-structured data. They support SQL queries and other data processing engines. Data lakehouses offer flexible data quality control, high scalability, and cost-effectiveness for large data volumes. They support data science, machine learning, BI, and reporting and have improved data governance compared to data lakes.

Building an Azure Data Lakehouse

In general, lakehouse products share common features and functionality. They provide tooling to query the data in different ways and typically support several storage mechanisms. Although most vendors talk about the lakehouse as a product, it is often helpful to think of it as a software stack: different products on different layers, with each layer serving different needs or different parts of the business.

Unifying this stack is the product architecture, which guides how the layers are used and how data moves within the lakehouse. Different products have different reference architectures. For an Azure Data Lakehouse, Microsoft recommends the Medallion Architecture built on Microsoft Fabric.

What is Microsoft Fabric?

When building a data lakehouse in Azure, the recommended approach is to use Microsoft Fabric, Microsoft’s enterprise-ready, end-to-end analytics platform. You purchase “capacity”, which is essentially the amount of compute available to you at any given time, and then build out your data lake and related jobs within it. Fabric simplifies the required components (you need only one product) and brings all the pieces together. Within Fabric, OneLake is the data lake that serves as the foundation for all Fabric workloads. Every step should be getting data in, getting data out, or transforming the data for consumption.

What is the Medallion Architecture?

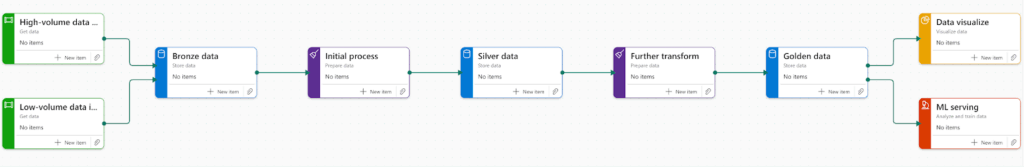

The recommended architecture for Azure data lakehouses built on Fabric is the medallion architecture. This architecture logically takes the data from bronze to silver to gold layers, with each layer representing higher data quality.

Contextualizing the Medallion Architecture with GRAX

The layers below follow the reference architecture, but customers often modify and customize them to fit their specific needs and existing architecture. GRAX Data Lake is not prescriptive about the layers, so it fits into either the reference architecture or a customized version. The key when adopting an architecture is to make sure it fits your organization and your specific business needs, and that it leaves room for growth as those needs evolve.

Bronze Layer – Raw Data Ingestion

The bronze layer of the medallion architecture is where raw data first enters the lakehouse. At this stage, the data remains in its original format. Fortunately for data lakehouse architects, GRAX delivers the historical data already in Parquet, so the lakehouse can consume it directly. With GRAX as the source for the Salesforce data in the bronze layer, your company will have the most complete replica of your current and historical Salesforce data in your data lake.
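As a minimal sketch of what “original format” means in practice, the plain-Python function below appends records to a bronze table without changing any field values, adding only two lineage columns. The function name, the list standing in for a table, and the `_source_file`/`_ingested_at` columns are hypothetical conventions, not part of GRAX or Fabric. In a Fabric notebook this step would more likely be a Spark read of the Parquet files appended to a Delta table.

```python
from datetime import datetime, timezone


def land_in_bronze(raw_records, source_file, bronze_table):
    """Append raw records to a bronze table without altering their values.

    bronze_table is a plain list standing in for a lakehouse table (hypothetical).
    Returns the number of records landed.
    """
    ingested_at = datetime.now(timezone.utc).isoformat()
    count = 0
    for record in raw_records:
        row = dict(record)  # shallow copy: the source record itself is left untouched
        # Lineage columns only; every original field and value is kept as-is.
        row["_source_file"] = source_file
        row["_ingested_at"] = ingested_at
        bronze_table.append(row)
        count += 1
    return count
```

Keeping bronze untouched means the silver and gold logic can always be rerun from the original data.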

Silver Layer – Cleanse and Augment

The silver layer is the validation layer of the lakehouse, where data is validated and refined. Typically, this is where validation rules are placed and where gates exist to deduplicate records, remove null values, and generally make sure the data is clean. An architect using GRAX for Salesforce data has the upper hand here: the data already arrives in good condition, with some type conversions applied. This simplifies the required data pipeline and reduces ongoing processing.
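The silver-layer gates described above can be sketched in plain Python. This example assumes Salesforce-style rows with `Id`, `SystemModstamp` (an ISO-8601 timestamp with a UTC offset), and `Amount` (arriving as text); the required-field list and the function name are illustrative only. It drops rows missing a required field, keeps only the most recent version of each record, and converts `Amount` to `Decimal`.

```python
from datetime import datetime
from decimal import Decimal

# Hypothetical validation rule: rows without these fields are rejected.
REQUIRED_FIELDS = ("Id", "SystemModstamp")


def to_silver(bronze_rows):
    """Validate, deduplicate, and type-convert bronze rows into silver rows."""
    latest = {}
    for row in bronze_rows:
        # Gate 1: drop rows that are missing any required field.
        if any(row.get(field) in (None, "") for field in REQUIRED_FIELDS):
            continue
        modstamp = datetime.fromisoformat(row["SystemModstamp"])
        current = latest.get(row["Id"])
        # Gate 2: keep only the most recent version of each record.
        if current is None or modstamp > current["SystemModstamp"]:
            clean = dict(row)
            clean["SystemModstamp"] = modstamp
            # Type conversion: text Amount becomes Decimal; blanks become None.
            amount = clean.get("Amount")
            clean["Amount"] = Decimal(str(amount)) if amount not in (None, "") else None
            latest[row["Id"]] = clean
    return list(latest.values())
```

Keeping only the latest version suits a current-state silver table. To preserve the history GRAX replicates, you would instead keep every version and flag the current one.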

Gold Layer – Optimize and Distribute

The gold layer is the third layer within the lakehouse. It is the enrichment layer, where the data undergoes further processing to align with business requirements. This could include rollups, aggregation, enrichment from external sources, or combining it with data from other systems of record. Sometimes there will be one gold layer; other times there may be several, depending on the data requirements and needs of the business. At this stage, the data is ready for use.
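A gold-layer rollup can be small. The sketch below, assuming silver Opportunity rows with `AccountId`, `StageName`, `Amount` (as `Decimal`), and `IsClosed` fields, totals open pipeline per account and stage. The function name and the choice to exclude closed opportunities are illustrative assumptions, not a prescribed gold model.

```python
from collections import defaultdict
from decimal import Decimal


def pipeline_by_account(silver_opportunities):
    """Sum open-opportunity Amount per (AccountId, StageName)."""
    totals = defaultdict(Decimal)  # Decimal() starts each total at Decimal("0")
    for opp in silver_opportunities:
        if opp.get("IsClosed"):
            continue  # this rollup covers open pipeline only
        if opp.get("Amount") is None:
            continue  # nothing to add for opportunities without an amount
        totals[(opp["AccountId"], opp["StageName"])] += opp["Amount"]
    return dict(totals)
```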

How GRAX Enhances the Data Lakehouse Experience for Salesforce Customers

GRAX customers are miles ahead when building their data lakehouse strategy because of the way GRAX replicates their data into their cloud. A common question is, “Can’t I use my legacy ETL tooling or the Salesforce endpoint built into Fabric?” This is like asking why own a stove when you could build a fire in the backyard to cook. Sure, you could do that, but it’s a lot more work and ongoing maintenance to get the exact same result. Likewise, if you’re using traditional ETL or import tools, there is a lot of maintenance involved, mostly around syncing the ETL jobs to the Salesforce schema.

A common support task in the integration space is updating integrations to reflect new tables or changed schemas (new or changed columns). This work takes time and often means there is a lag between a schema change in Salesforce and when that change is reflected in the data lakehouse. Because GRAX automatically handles new Salesforce objects and changes to your metadata, the ingestion process is greatly simplified.
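To show the kind of upkeep a hand-built integration carries, here is a hypothetical sketch of the check it must run whenever Salesforce metadata changes: compare the incoming columns with the lakehouse table and decide what to do with the differences. Schemas are modeled as `{column: type}` dicts, and the function names and policy (add new columns automatically, stop on type changes, keep removed columns) are assumptions for illustration. This is not how GRAX implements schema handling.

```python
def diff_schema(source, target):
    """Compare source and target schemas given as {column_name: type_name} dicts."""
    added = {col: typ for col, typ in source.items() if col not in target}
    changed = {
        col: (target[col], typ)  # (old type, new type)
        for col, typ in source.items()
        if col in target and target[col] != typ
    }
    removed = [col for col in target if col not in source]
    return added, changed, removed


def evolve_schema(source, target):
    """Return the target schema with new columns added.

    Type changes raise for manual review; removed columns are kept so
    existing data in the table stays queryable.
    """
    added, changed, _removed = diff_schema(source, target)
    if changed:
        raise ValueError(f"Type changes need review: {changed}")
    evolved = dict(target)
    evolved.update(added)
    return evolved
```

In a traditional ETL setup, every branch of this logic is something your team owns and maintains.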

Data Transformation with Azure

One of the differentiators of Fabric and an Azure Data Lakehouse is also one of the things that trips people up initially: the sheer array of options for moving and transforming data. As of May 2025, there are nine different ways to transform your data. The first question people ask is, “Which way do I do it?” Fortunately, the answer is “whichever way works best.” The following options are currently available:

- Apache Airflow job
- Azure Data Factory
- Copy job
- Data pipeline
- Dataflow Gen1
- Dataflow Gen2
- Eventstream
- Notebook
- Spark Job Definition

For details on each option, see the Microsoft Fabric Data Factory documentation: https://learn.microsoft.com/en-us/fabric/data-factory/.

Microsoft also publishes decision guides to help narrow down the right choice, although they have not been updated to cover all of the options: https://learn.microsoft.com/en-us/fabric/fundamentals/decision-guide-pipeline-dataflow-spark

As always, keep it simple, not just for you but for future developers and maintainers. There is no reason to build a Python script when a copy job will do. Likewise, there is no reason to create a huge tangle of temp tables and T-SQL to build a rollup when a Python script can do it in 20 lines of code.

Putting it all together

GRAX simplifies and reduces the lift of bringing your Salesforce data (and its history) into your Azure data lakehouse. This enables faster time to value for your lakehouse investment and gets your downstream teams consuming Salesforce and data lakehouse data sooner. By leveraging Microsoft Fabric, OneLake, and GRAX, you make all of your data accessible to the whole enterprise without complicated ETL.

Ready to build your own Salesforce-powered lakehouse?

FAQs

What is a Data Lakehouse?

A data lakehouse combines the storage flexibility and scalability of a data lake with the data management and analytics capabilities of a data warehouse, providing a unified platform for various data and analytics workloads.

What is the Medallion Architecture in Azure?

The Medallion Architecture, used in Azure data lakehouses, organizes data into bronze (raw), silver (validated), and gold (enriched) layers, progressively increasing data quality and readiness for use.

How Does GRAX Integrate with Microsoft Fabric?

GRAX integrates with Microsoft Fabric by providing Salesforce data in Parquet format, ready for ingestion into the bronze layer of the Medallion Architecture, which simplifies data integration.

Why not use legacy ETL tools or the Salesforce endpoint built into Fabric for a data lakehouse?

While possible, legacy ETL tools and the built-in Salesforce endpoint often require significant maintenance, especially to keep up with Salesforce schema changes. GRAX simplifies this process by automatically handling new Salesforce objects and metadata changes, reducing maintenance and ensuring data is current within the data lakehouse.

What are the Benefits of Combining GRAX with Azure?

Combining GRAX with Azure simplifies Salesforce data ingestion and enhances the data lakehouse experience by reducing maintenance, ensuring data quality, and accelerating downstream data consumption.